IESE Insight

Fighting fake news: tips to keep your biases in check

Misinformation is everywhere. Win the battle in your mind with these tips to keep your biases in check and develop more critical thinking.

By Alex Edmans

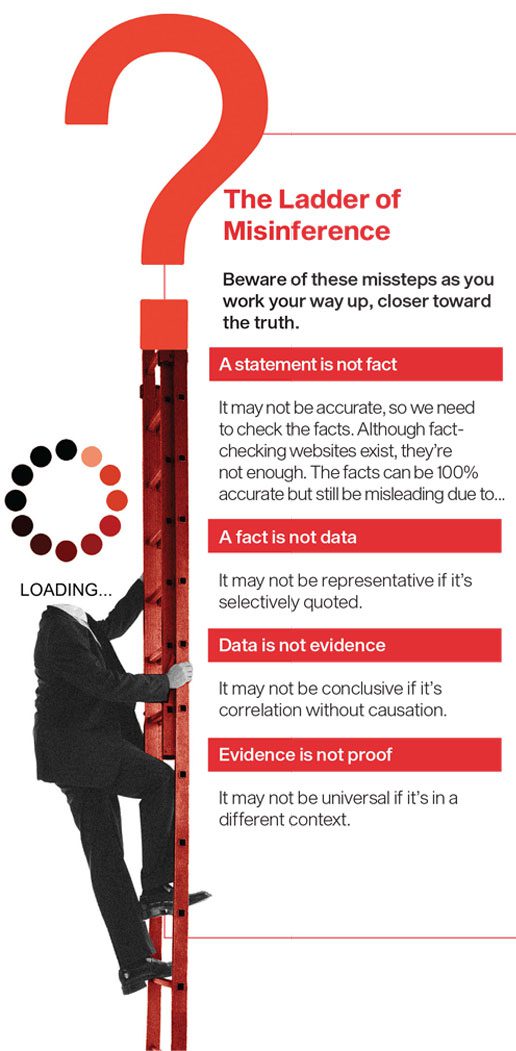

Check the facts. Examine the evidence. Correlation is not causation. We’ve heard these phrases so many times, you’d think they’d be in our DNA — but if that were the case, then misinformation would never get out of the starting blocks.

And yet here we are: Years after the 2016 Brexit vote, a poll of the British public found 42% of them still believed the false claim, plastered on buses in the run-up to the referendum, that European Union membership cost the U.K. £350 million per week, while another 22% were still unsure. This myth, which may have been pivotal in the U.K. voting to leave the EU, persisted even though the U.K. Statistics Authority had refuted it.

Or consider the so-called 10,000-hour rule, popularized by Malcolm Gladwell in his book Outliers, which holds that it takes 10,000 hours of practice to master any skill. Yet the “rule” he references is based on a study of student violinists and never specified 10,000 hours.

Then there’s the crushing guilt that exhausted mothers feel for bottle-feeding their babies, worrying they may somehow be harming their child’s cognitive development because of a publicized association between breastfeeding and higher IQ later in life. This overlooks a wealth of studies showing little or no effect of breastfeeding on future intelligence, with parental factors counting for more.

In all these cases, what’s striking is that we should know better. We all know that the side of a bus is not a reliable source of information. Gladwell cited the study behind his 10,000-hour rule, and a quick Ctrl+F shows that the original paper doesn’t mention anything even close.

If I share a study on LinkedIn whose findings people don’t like, there’s no shortage of comments on how correlation is not causation — exactly the kind of discerning engagement I’m hoping to prompt. But do I see the same critical thinking when I post a paper that finds their favor? Unfortunately not: People lap it up uncritically.

Why do we leave what we’ve learned at the door and rush to accept a statement at face value? The culprit is usually found within: It all comes down to our biases.

In his groundbreaking book, Thinking, fast and slow, Nobel laureate Daniel Kahneman refers to our rational, slow thought process as System 2, and to our impulsive, fast thought process, driven by our biases, as System 1. In the cold light of day, we know that we shouldn’t take claims at face value; but when our System 1 is in overdrive, the red mist of anger clouds our vision.

Kahneman focuses on biases that distort how we make decisions and form judgments. In my new book, May contain lies: How stories, statistics and studies exploit our biases — and what we can do about it, I take a deep dive into the psychological biases that affect how we interpret information, zoning in on the two biggest culprits: confirmation bias and black-and-white thinking.

Confirmation bias

The first culprit is confirmation bias — the temptation to accept evidence uncritically if it confirms what we would like to be true. So, hearkening back to my opening examples, euroskeptics desperately wanted to believe the EU was bleeding the U.K. dry; we’ve all been brought up to believe practice makes perfect; and who wouldn’t trust natural breastmilk over the artificial formula of a giant corporation?

The flip side of confirmation bias is that we reject a claim out of hand, without even considering the evidence behind it, if it clashes with our worldview.

Confirmation bias is hard to shake because it’s wired into our brains. In one study, three neuroscientists took students with liberal political views and hooked them up to a functional magnetic resonance imaging (fMRI) scanner. The researchers read out either a political statement the participants had previously said they agreed with (like “The death penalty should be abolished”) or a nonpolitical statement (like “The primary purpose of sleep is to rest the body and mind”). Participants were then given contradictory evidence that challenged those statements, while their brain activity was measured. So, a political statement like “The laws regulating gun ownership in the United States should be made more restrictive” was contradicted with “More people accidentally drown each year than have been killed in gun-related accidents.” When nonpolitical claims were challenged, there was no effect. But countering political positions triggered the amygdala, the same part of the brain that is activated when, say, a tiger attacks you, inducing a fight-or-flight response. In other words, people respond to challenges to their deeply held beliefs as if they were being attacked by a wild animal. The amygdala drives our System 1 and drowns out the prefrontal cortex, which operates our System 2.

Confirmation bias looms large for issues where we already hold an opinion, and emotions run high over the death penalty, EU membership and breastfeeding. Where we have no prior view, there’s nothing to confirm and hence no confirmation bias, so we might hope to approach such issues with a clear head.

Black-and-white thinking

Unfortunately, another bias kicks in — black-and-white thinking. This bias means that we view the world in binary terms. We see something as either always good or always bad, with no shades of gray.

The bestselling weight-loss book, Dr Atkins’ new diet revolution, exploited this bias. Most people think protein is good: We learn in school that it builds muscle, repairs cells and strengthens bones. Fat just sounds bad; surely it’s called that because it makes you fat? But the Atkins diet targeted carbs, which aren’t so clear-cut. Before Atkins, people may not have had strong views on whether carbs were good or bad. But once they believe carbs must be one or the other, with no middle ground, they will latch onto a categorical recommendation.

That is what the Atkins diet did. It had one rule and one rule only: Avoid all carbs. Not just refined sugar, not just simple carbs, but all carbs. You can decide whether to eat something by looking at the carbohydrate line on the nutrition label, without worrying whether the carbs are complex or simple, natural or processed. This simple rule played into black-and-white thinking and made it easy to follow. If the Atkins diet had recommended eating as many carbs as possible, it might still have spread like wildfire. To pen a bestseller, Atkins didn’t need to be right. He just needed to be extreme.

We see black-and-white statements all the time, evidence or no evidence. People claim that “culture eats strategy for breakfast,” citing Peter Drucker. But Drucker never made this claim, and even if he had, it would be meaningless unless he had conducted a study comparing the performance of one set of companies with strong culture and weak strategy against another set with strong strategy and weak culture.

Even worthy causes can go astray due to black-and-white thinking that ignores any trade-offs. Governments, investors and companies are racing to Net Zero with only perfunctory mentions of “a just transition,” while 600 million people in Africa have no access to electricity and nothing to transition from. In too many cases, nuance is missing from the conversation.

Becoming a better lie detector

So, how do we equip ourselves to navigate the misinformation minefield and win the battle in our own minds?

Recognize our own biases. The first step is self-awareness. If a statement sparks our emotions and we’re raring to share it or trash it, or if it’s extreme and offers a one-size-fits-all prescription, we need to proceed with caution.

Ask questions. The second step is to interrogate the claim, particularly if it’s one we are eager to accept. Try considering the opposite: If a study had reached the opposite conclusion, what holes would you poke in it? Then ask yourself whether those concerns still apply even though it gives you the results you want.

Take the plethora of studies claiming that sustainability improves company performance. I would love that to be true, given that most of my work is on the business case for sustainability. But what if a paper had found sustainability worsened performance? A sustainability supporter like me would throw up a whole host of objections:

- First, how did the researchers actually measure sustainability? Did they capture what the company really delivered, or merely its own sustainability claims or people’s subjective opinions of it?

- Second, how large a sample did they analyze? If it was a handful of firms over just one year, the underperformance could be due to randomness; there’s not enough data to draw strong conclusions.

- Third, is it causation or just correlation? Perhaps high sustainability doesn’t cause low performance; instead, a third factor drives both. Tech firms typically score high on sustainability, so in periods when tech underperforms the market, high sustainability scores and weak performance will go together even if neither causes the other.

Once you have opened your eyes to potential problems, ask yourself whether they plague the study you are eager to trumpet. Many sustainability articles suffer from exactly these flaws: They use dubious measures of sustainability, consider short time periods and ignore alternative explanations.

Consider the source. Think about who wrote the study and what their incentives are to make the claim they did. Many reports are produced by organizations whose goal is advocacy rather than scientific inquiry.

For example, no consultancy will release a paper concluding that sustainability doesn’t improve performance, because this wouldn’t be good for their brand. Any report on CEO pay by the High Pay Centre, a U.K. think tank for fairer pay, will unsurprisingly conclude that CEOs are excessively paid.

Ask yourself: Would the authors have published the paper if they had found the opposite result? If not, they may have cherry-picked their data or methodology.

Beyond bias, consider the authors’ expertise in conducting scientific research. Leading CEOs and investors have substantial experience, and nobody is better qualified to write an account of the companies they have run or the investments they have made. However, some business gurus move beyond regaling audiences with their own war stories to proclaiming a universal set of rules for success. Without scientific research to test their claims, we can’t know whether the principles that worked for them will work for others.

Sometimes people will liberally cite “research by the University of Sunnybeach” when it supports their position, yet they would never even think of hiring anyone from the University of Sunnybeach for a job in their own organization. A simple test is this: If the same study were written by the same authors with the same credentials but found the opposite results, would you still believe it? Or would you dismiss it as “what else would you expect from the University of Sunnybeach?”

Stay alert to misinformation

Misinformation is arguably a greater problem now than it ever has been. Anyone can make a claim, start a conspiracy theory or post a statistic — perhaps assisted by generative AI — and if people want it to be true, it will go viral.

But we have the tools within us to combat it. We know how to show discernment: Ask questions. Conduct due diligence. The trick is to tame our biases and, when we see something we are all too eager to accept, apply the same scrutiny we would to something we dislike.

READ MORE: This article is adapted from May contain lies: How stories, statistics and studies exploit our biases — and what we can do about it (Penguin Random House, 2024).

READ ALSO: “ESG is dead, long live ESG,” in which IESE Prof. Fabrizio Ferraro discusses ESG with Alex Edmans, who argues that, as important as ESG factors are for companies and investors, they shouldn’t be treated as more or less special than other intangible assets that drive long-term value and create positive externalities for society. Again, he stresses the importance of healthy skepticism and critically evaluating all the evidence before jumping to conclusions.

This article is published in IESE Business School Insight magazine #168 (Sept.-Dec. 2024).